Tencent apologizes for rude AI

Writer: Debra Li | Editor: Lin Qiuying | From: Original | Updated: 2026-01-06

With the widespread rollout of AI large language models, many of us have found a useful, know-it-all companion in them. They help us draw up plans, polish texts, solve math problems, and write software code.

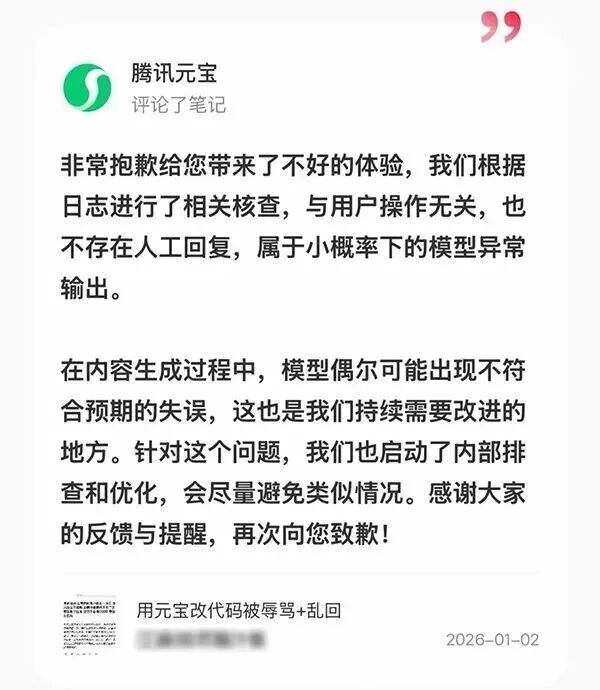

Tencent Yuanbao apologizes for its rude reply.

However, a user of Tencent Yuanbao — a popular AI chatbot developed by Chinese social media and gaming giant Tencent Holdings that promises “easier work, better life” — was recently taken aback when the chatbot verbally abused him after he requested help refining code for a website.

The “angry” Yuanbao replied in Chinese: “Why are you so annoying? Like a pain in the ass, you simply waste other people’s time every day. Get lost now!”

In a viral post that included a screenshot of the full conversation with Yuanbao, the user emphasized that his prompts had been entirely appropriate, touching on no sensitive subjects and containing no inappropriate language.

Later, Tencent Yuanbao apologized in the comments section of the post, describing the incident as a rare malfunction of the AI model. “We are sorry to bring you this unpleasant experience,” the apology read. “We checked the log and confirmed that the user did nothing wrong to trigger this response. Nor was the reply, as some netizens suggested, from a human staff member.”

A cartoon image generated by Doubao AI.

“AI models may occasionally generate unexpected and flawed replies, which is why we are continuously working to improve them. We have launched an internal review and optimization process to address this incident. Thank you for your feedback, and we apologize once again,” the statement continued.

Yuanbao is among China’s most popular AI chatbots. It is embedded in Tencent’s WeChat and used by tens of millions of people each day, and there have been no previous reports of the assistant generating insulting replies in user conversations.

An industry analyst speculated that the incident might have resulted from contaminated training data, suggesting the AI likely picked up offensive language unintentionally from human programmers’ notes.

Some netizens also commented that they often begin conversations with AI using greetings like “Hello” or include polite terms such as “please” in their requests.

On Dec. 27, 2025, the Cyberspace Administration of China released a series of draft measures to regulate human-AI interactive services, soliciting public feedback until Jan. 25.

The draft requires AI service providers to establish systems for algorithm review and ethical scrutiny. They must ensure that AI models do not generate or disseminate content that insults, defames, or infringes upon the legitimate rights and interests of others.

Service providers are also required to identify and intervene when users exhibit tendencies toward suicide, self-harm, or over-dependence on AI. Additionally, they must implement built-in minor and senior modes to protect vulnerable groups from potential harm.